The Swish activation function is continuous at all points. Its shape looks similar to ReLU in that it is unbounded above and bounded below, but unlike ReLU it is differentiable at all points. A significant improvement in classification accuracy has been reported with the NASNet-A [45] and Inception-ResNet-v2 [46] deep networks. Swish has also been used outside image classification: one proposed DNN digital predistortion (DPD) uses the Swish, or sigmoid-weighted linear unit, activation function instead of sigmoid and rectified linear units.

Is Swish really better than ReLU? In my own test, Swish was not as powerful as I expected, and it needed about 20% more time to train. Comparing the training histories, ReLU also seemed to produce the better training curve.

The test used a Keras classifier with a custom `swish(x)` activation written against the Keras backend (`from keras import backend as K`), with `Dense` and `Activation` imported from `keras.layers`, `optimizers` from `keras`, and `class_weight` from `sklearn.utils`. The network stacks `Dense` layers of 10 (with `input_dim = 10`), 50, 25, 10, and 5 units, all with `activation = swish` and `kernel_initializer = 'uniform'`, followed by a single output unit with `activation = 'sigmoid'`. It was compiled with `optimizer = adam` and `loss = 'binary_crossentropy'`, then fit with `batch_size = 100`, `epochs = 100`, and `validation_data = (x_test, y_test)`.


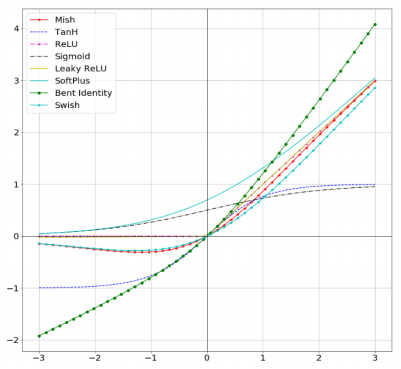

Recently, Google Brain proposed a new activation function called Swish. According to the paper, Swish performs better than ReLU: it shows a notable performance increase in networks such as Inception-ResNet-v2 (by 0.6%) and Mobile NASNet-A.

Swish is a continuous, non-monotonic function that handles the dying ReLU problem better than ReLU does. From the above figure, we can observe that in the negative region of the x-axis the tail has a different shape from that of the ReLU activation function. Because of this, the output of the Swish activation function may decrease even when the input value increases.

The ELU activation function takes a related approach. It produces activations instead of letting them be zero when calculating the gradient, and it produces negative outputs, which helps the network nudge weights and biases in the right directions. As you might have noticed, we avoid the dead ReLU problem here while still keeping some of the computational speed gained by the ReLU activation function; that is, we will still have some dead components in the network.
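The non-monotonic tail described above can be checked numerically. The following is a minimal NumPy sketch, not the code from the original post (whose `swish` body is not shown). It assumes the form $x \cdot \text{sigmoid}(\beta x)$ from the Swish paper with $\beta = 1$ by default; the helper names `sigmoid`, `swish_grad`, and `relu` are illustrative.

```python
import numpy as np

def sigmoid(x):
    # Logistic function 1 / (1 + e^-x).
    return 1.0 / (1.0 + np.exp(-x))

def swish(x, beta=1.0):
    # Assumed form: x * sigmoid(beta * x); beta = 1 is the common default.
    return x * sigmoid(beta * x)

def swish_grad(x, beta=1.0):
    # Product rule: sigmoid(bx) + b * x * sigmoid(bx) * (1 - sigmoid(bx)).
    s = sigmoid(beta * x)
    return s + beta * x * s * (1.0 - s)

def relu(x):
    return np.maximum(0.0, x)

xs = np.array([-5.0, -2.0, -1.0, 0.0, 1.0, 2.0])
print("x     :", xs)
print("swish :", np.round(swish(xs), 4))
print("relu  :", relu(xs))
print("swish':", np.round(swish_grad(xs), 4))
```

In the printed values, the Swish output falls as the input rises from -5 to -1, and the gradient is negative there but not zero, whereas ReLU outputs exactly zero for every negative input.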


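For the differentiated ELU activation function, the y-value output is $1$ if the input $x$ is greater than zero. If $x$ is less than zero, the output is the ELU function itself (not differentiated) plus the alpha value: $\text{ELU}'(x) = \text{ELU}(x) + \alpha$. Since $\text{ELU}(x) = \alpha(e^x - 1)$ for negative inputs, this equals $\alpha e^x$.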
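The rule above translates directly into code. This is a small NumPy sketch under the usual ELU definition ($x$ for positive inputs, $\alpha(e^x - 1)$ otherwise); the function names `elu` and `elu_grad` and the default `alpha=1.0` are illustrative choices, not taken from the article.

```python
import numpy as np

def elu(x, alpha=1.0):
    # ELU: x for x > 0, alpha * (e^x - 1) otherwise.
    x = np.asarray(x, dtype=float)
    # Clamp the exponent input so large positive x cannot overflow exp().
    return np.where(x > 0, x, alpha * (np.exp(np.minimum(x, 0.0)) - 1.0))

def elu_grad(x, alpha=1.0):
    # Derivative: 1 for x > 0, ELU(x) + alpha (= alpha * e^x) otherwise.
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, 1.0, elu(x, alpha) + alpha)

xs = np.array([-3.0, -1.0, -0.1, 0.5, 2.0])
print("elu :", np.round(elu(xs), 4))
print("elu':", np.round(elu_grad(xs), 4))
```

The gradient for negative inputs shrinks toward zero as the input becomes more negative but never reaches it, which is why ELU units keep receiving updates where ReLU units would not.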

SWISH ACTIVATION FUNCTION CODE

I show you the vanishing and exploding gradient problem; for the latter, I follow Nielsen's great example of why gradients might explode. The goal is to explain the equation and graphs in simple input-output terms. At last, I provide some code that you can run for yourself in a Jupyter Notebook. From the small code experiment on the MNIST dataset, we obtain a loss and accuracy graph for each activation function.

The choice of activation functions in deep networks has a significant effect on the training dynamics and task performance. The experiments show that Swish tends to work better than ReLU on deeper models across a number of challenging datasets, and its simplicity and similarity to ReLU make it easy for practitioners to replace ReLUs with Swish units in any neural network.
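To make the vanishing and exploding gradient problem mentioned above concrete, here is a rough sketch in the spirit of Nielsen's example: a chain with one sigmoid neuron per layer, where the backpropagated gradient contains a product of $w_j \cdot \sigma'(z_j)$ terms. The function `chain_gradient` and the chosen weights are hypothetical illustrations, not code from the article or from Nielsen's book.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def sigmoid_grad(z):
    # sigmoid'(z) = s * (1 - s); its maximum is 0.25, reached at z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)

def chain_gradient(weights, pre_activations):
    # Product of w_j * sigmoid'(z_j) along a chain of single-neuron layers.
    w = np.asarray(weights, dtype=float)
    z = np.asarray(pre_activations, dtype=float)
    return float(np.prod(w * sigmoid_grad(z)))

layers = 10
vanishing = chain_gradient([1.0] * layers, [0.0] * layers)    # each factor is 0.25
exploding = chain_gradient([100.0] * layers, [0.0] * layers)  # each factor is 25
print("weights of 1   :", vanishing)
print("weights of 100 :", exploding)
```

With modest weights every factor is at most 0.25, so the product shrinks geometrically with depth. With large weights and pre-activations held near zero, each factor exceeds 1 and the product grows instead.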

In this extensive article (>6k words), I'm going to go over 6 different activation functions, each with pros and cons. I will give you the equation, the differentiated equation, and plots for each of them.

There are eleven activation functions, including the Binary Step Function, Sigmoid, hyperbolic tangent (Tanh), and Rectified Linear Unit (ReLU).
